ABOUT KNIME:

KNIME (pronounced /naɪm/), the Konstanz Information Miner, is an open-source data analytics, reporting and integration platform. KNIME integrates various components for machine learning and data mining through its modular data pipelining concept. A graphical user interface allows nodes to be assembled for data pre-processing (ETL: Extraction, Transformation, Loading), for modelling, and for data analysis and visualization. To some extent, KNIME can be considered an alternative to SAS.

KNIME allows users to visually create data flows (or pipelines), selectively execute some or all analysis steps, and later inspect the results, models, and interactive views. KNIME is written in Java, is based on Eclipse, and uses Eclipse's extension mechanism to add plugins that provide additional functionality. The core version already includes hundreds of modules for data integration (file I/O, database nodes supporting all common database management systems through JDBC), data transformation (filter, converter, combiner), as well as the commonly used methods for data analysis and visualization. With the free Report Designer extension, KNIME workflows can be used as data sets to create report templates that can be exported to document formats such as doc, ppt, xls, pdf and others.

CAPABILITIES OF KNIME:

KNIME's core architecture allows processing of large data volumes that are limited only by the available hard disk space (most other open-source data analysis tools work in main memory and are therefore limited to the available RAM). For example, KNIME allows analysis of 300 million customer addresses, 20 million cell images and 10 million molecular structures.

Additional plugins allow the integration of methods for text mining and image mining, as well as time series analysis.

KNIME integrates various other open-source projects, e.g. machine learning algorithms from Weka, the R statistics package, as well as LIBSVM, JFreeChart, ImageJ, etc.

KNIME is implemented in Java but also allows for wrappers calling other code, in addition to providing nodes that can run Java, Python, Perl and other code fragments.

COMPARISON:

Python: Having originated as an open-source scripting language, Python has grown in usage over time. Today it offers libraries (numpy, scipy and matplotlib) and functions for almost any statistical operation or model building you may want to do. Since the introduction of pandas, it has become very strong in operations on structured data.

Python is a programming language that is popular for data mining tasks. Programming languages require you to give the computer very detailed, step-by-step instructions of what to do. Memorizing those programming statements is a good deal of what "learning to program" consists of. You can use Python's add-on packages to minimize your programming effort, but you are still doing some programming.

SAS: SAS has been the undisputed market leader in the commercial analytics space. The software offers a huge array of statistical functions, has good GUIs (Enterprise Guide and Enterprise Miner) that let people learn quickly, and provides excellent technical support. However, it ends up being the most expensive option and is not always updated with the latest statistical functions.

R: R is the open-source counterpart of SAS and has traditionally been used in academia and research. Because of its open-source nature, the latest techniques get released quickly. There is a lot of documentation available on the internet, and it is a very cost-effective option. R is easy to get started with too, but it needs around a week of initial reading before you can get going.

KNIME is primarily a workflow-based package that tries to give you most of the flexibility and power of programming without requiring you to know how to program. Its workflow style is easy to use: you drag and drop icons that represent the steps of the analysis onto a drawing window. What each icon does is controlled by dialog boxes rather than by commands you have to remember. When finished, the workflow

1) accomplishes the tasks,

2) documents the steps for reproducibility,

3) shows you the big picture of what was done, and

4) allows you to reuse the steps on new sets of data without resorting to any underlying programming code (as menu-based user interfaces such as SPSS often require).

A particularly nice feature of KNIME is that it allows you to add nodes to your workflow that contain custom programming. This lets you combine the two approaches, making the most of each.

NOTE:

KNIME needs a basic understanding of the dataset and some logical thinking before getting into the analysis. It makes the analysis much easier, because we do not have to remember the algorithms.

Iris flower data set

The Iris flower data set, or Fisher's Iris data set, is a multivariate data set introduced by the British statistician and biologist Ronald Fisher in his 1936 paper The use of multiple measurements in taxonomic problems as an example of linear discriminant analysis. It is sometimes called Anderson's Iris data set because Edgar Anderson collected the data to quantify the morphologic variation of Iris flowers of three related species. Two of the three species were collected in the Gaspé Peninsula, "all from the same pasture, and picked on the same day and measured at the same time by the same person with the same apparatus".

The data set consists of 50 samples from each of three species of Iris (Iris setosa, Iris virginica and Iris versicolor). Four features were measured from each sample: the length and the width of the sepals and petals, in centimetres. Based on the combination of these four features, Fisher developed a linear discriminant model to distinguish the species from each other.

KNIME ANALYTICS PLATFORM

This section uses the simple regression tree for regression in KNIME Analytics Platform.

Node Repository:

The node repository contains all KNIME nodes ordered in categories. A category can contain another category; for example, the Read category is a subcategory of the IO category. Nodes are added from the repository to the workflow editor by dragging them to the workflow editor. Selecting a category displays all contained nodes in the node description view; selecting a node displays the help for this node. If you know the name of a node, you can enter parts of the name into the search box of the node repository. As you type, the nodes are filtered immediately to those that contain the entered text in their names.

Drag and drop the File Reader from the node repository into the workflow editor.

Workflow Editor:

The workflow editor is used to assemble workflows, configure and execute nodes, inspect the results and explore your data. This section describes the interactions possible within the editor.

File Reader:

This node can be used to read data from an ASCII file or URL location. It can be configured to read various formats. When you open the node's configuration dialog and provide a filename, it tries to guess the reader's settings by analyzing the content of the file. Check the results of these settings in the preview table. If the data shown is not correct or an error is reported, you can adjust the settings manually.

The file analysis runs in the background and can be cut short by clicking the "Quick scan" button, which is shown if the analysis takes longer. In this case the file is not analyzed completely; only the first fifty lines are taken into account. It can then happen that the preview looks fine, but the execution of the File Reader fails when it reads the lines it did not analyze. It is therefore recommended that you check the settings when you cut an analysis short. Load the Iris data set into the File Reader by configuring it.
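
The File Reader is configured through its dialog, not through code. Purely as an analogy to the guessing step described above, the sketch below uses Python's standard `csv.Sniffer` to guess the delimiter and whether there is a header row from only the first fifty lines, similar to a quick scan. The sample rows, the column names (`sepal_length`, `sepal_width`, `petal_length`, `petal_width`, `species`) and the helper names are assumptions for illustration; this is not how KNIME implements its file analysis.

```python
import csv
import io
from itertools import islice

# Illustrative input: a header line (column names are an assumption)
# followed by a few rows in the layout of the common iris.data file.
SAMPLE = """sepal_length,sepal_width,petal_length,petal_width,species
5.1,3.5,1.4,0.2,Iris-setosa
4.9,3.0,1.4,0.2,Iris-setosa
7.0,3.2,4.7,1.4,Iris-versicolor
6.3,3.3,6.0,2.5,Iris-virginica
"""

QUICK_SCAN_LINES = 50  # analogous to the "first fifty lines" of a quick scan


def guess_settings(text: str, max_lines: int = QUICK_SCAN_LINES):
    """Guess the delimiter and header presence from the first max_lines lines only."""
    head = "".join(islice(io.StringIO(text), max_lines))
    sniffer = csv.Sniffer()
    dialect = sniffer.sniff(head, delimiters=",;\t")
    return dialect, sniffer.has_header(head)


def read_table(text: str):
    """Read the whole text with the guessed settings; returns (column names, rows)."""
    dialect, has_header = guess_settings(text)
    rows = list(csv.reader(io.StringIO(text), dialect))
    if has_header:
        return rows[0], rows[1:]
    return [f"col{i}" for i in range(len(rows[0]))], rows


columns, rows = read_table(SAMPLE)
print(columns)
print(len(rows), "data rows")
```

As with a quick scan, anything unusual after line 50 would not influence the guessed settings, so problems would only show up when the full file is processed.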

Configure:

When a node is dragged to the workflow editor or is connected, it usually shows the red status light, indicating that it needs to be configured, i.e. its dialog has to be opened. This can be done either by double-clicking the node or by right-clicking the node to open the context menu. The first entry of the context menu is "Configure", which opens the dialog. If the node is selected, you can also choose the related button from the toolbar above the editor; the button looks like the icon next to the context menu entry.

Partitioning:

Then drag and drop the Partitioning node from the node repository into the workflow editor. This is done to divide the Iris data set into training and test data. The input table is split row-wise into two partitions, e.g. train and test data, which are available at the node's two output ports. The following options are available in the dialog.

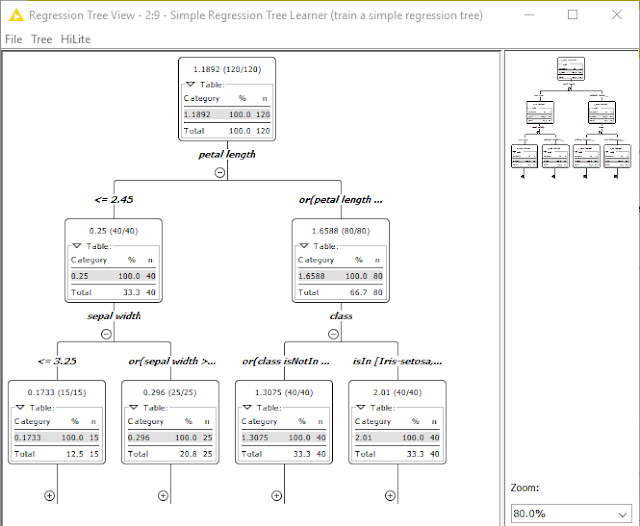
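
The Partitioning node does this split through its dialog. As a rough sketch of what a random 80/20 split means (the proportions used later in this post), the function below shuffles row indices with a fixed seed and cuts them at 80%. The function name, the seed value and the choice to keep the original row order inside each partition are assumptions of this sketch, not KNIME settings.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def partition_rows(rows: Sequence[T], first_fraction: float = 0.8,
                   seed: int = 42) -> Tuple[List[T], List[T]]:
    """Randomly split rows into two partitions, keeping the original row order in each."""
    if not 0.0 <= first_fraction <= 1.0:
        raise ValueError("first_fraction must be between 0 and 1")
    indices = list(range(len(rows)))
    random.Random(seed).shuffle(indices)  # fixed seed -> reproducible split
    cut = round(first_fraction * len(rows))
    first = [rows[i] for i in sorted(indices[:cut])]
    second = [rows[i] for i in sorted(indices[cut:])]
    return first, second


rows = list(range(150))  # stand-in for the 150 rows of the Iris data set
train, test = partition_rows(rows)
print(len(train), len(test))  # 120 30
```

Fixing the seed makes the split reproducible, which matters when you rerun the workflow and want to compare the scores.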

Simple Regression Tree Learner:

Drag and drop the Simple Regression Tree Learner into the workflow editor. It learns a single regression tree. The procedure follows the algorithm described in CART ("Classification and Regression Trees", Breiman et al., 1984), whereby the current implementation applies a couple of simplifications, e.g. no pruning, not necessarily binary trees, etc.
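
The core step of a CART-style regression tree is choosing, for a numeric feature, the threshold that most reduces the squared error of the target. The sketch below shows that step for a single binary split on one feature, using the reduction in the sum of squared errors as the gain. It is a simplified illustration under that assumption, not the KNIME node's code, and the data are toy values.

```python
from typing import List, Optional, Tuple


def sse(values: List[float]) -> float:
    """Sum of squared deviations from the mean (0.0 for an empty list)."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def best_split(x: List[float], y: List[float]) -> Optional[Tuple[float, float]]:
    """Best binary split 'x <= threshold' on one numeric feature.

    Returns (threshold, gain), where gain is the reduction in the sum of
    squared errors of the target y, or None if no split is possible.
    """
    parent = sse(y)
    pairs = sorted(zip(x, y))
    best: Optional[Tuple[float, float]] = None
    for i in range(1, len(pairs)):
        lower, upper = pairs[i - 1][0], pairs[i][0]
        if lower == upper:
            continue  # equal x values cannot be separated by a threshold
        threshold = (lower + upper) / 2
        left = [target for _, target in pairs[:i]]
        right = [target for _, target in pairs[i:]]
        gain = parent - sse(left) - sse(right)
        if best is None or gain > best[1]:
            best = (threshold, gain)
    return best


# Toy data: predict petal width (y) from petal length (x).
petal_length = [1.4, 1.4, 4.7, 6.0]
petal_width = [0.2, 0.2, 1.4, 2.5]
print(best_split(petal_length, petal_width))
```

Here the best threshold falls between 1.4 and 4.7 (about 3.05), separating the two small petal widths from the two larger ones. A full learner would apply this search recursively to each resulting subset.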

The currently used missing value handling also differs from the one used in CART. In each split the algorithm tries to find the best direction for missing values by sending them in each direction and selecting the one that yields the best result (i.e. the largest gain). The procedure is adapted from the well-known XGBoost algorithm and is described
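
The idea of trying both directions for missing values can be sketched in a few lines. For a given threshold, rows whose feature value is missing (`None` here) are first added to the left child, then to the right child, and the direction with the larger gain is kept. As an assumption, the sketch measures gain as the reduction in squared error, whereas XGBoost itself uses gradient-based statistics; the function name and data are hypothetical.

```python
from typing import List, Optional, Tuple


def sse(values: List[float]) -> float:
    """Sum of squared deviations from the mean (0.0 for an empty list)."""
    if not values:
        return 0.0
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def missing_direction(x: List[Optional[float]], y: List[float],
                      threshold: float) -> Tuple[str, float]:
    """Send rows with missing x (None) left, then right; keep the direction with the larger gain."""
    left = [t for v, t in zip(x, y) if v is not None and v <= threshold]
    right = [t for v, t in zip(x, y) if v is not None and v > threshold]
    missing = [t for v, t in zip(x, y) if v is None]
    parent = sse(y)
    gain_left = parent - sse(left + missing) - sse(right)
    gain_right = parent - sse(left) - sse(right + missing)
    return ("left", gain_left) if gain_left >= gain_right else ("right", gain_right)


# Toy data: the last row has a missing petal length but a large petal width.
x = [1.4, 1.4, 4.7, 6.0, None]
y = [0.2, 0.2, 1.4, 2.5, 2.3]
print(missing_direction(x, y, threshold=3.05))
```

Because the row with the missing value has a large target, sending it to the right child (the larger values) gives the larger gain.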

Simple Regression Tree Predictor:

Applies a regression tree model to new data: the prediction for a record is the mean of the training records in the corresponding child (leaf) node. Drag and drop the Simple Regression Tree Predictor from the node repository into the workflow editor.
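
A minimal sketch of that prediction rule: each record is routed down the tree by comparing a feature with a threshold until it reaches a leaf, and the leaf's stored mean is returned. The `Node` class, the feature name `petal_length`, the threshold and the leaf values are hypothetical and only illustrate the rule, not KNIME's model format.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Node:
    """Hypothetical tree node; a leaf stores the mean target of its training records."""
    feature: Optional[str] = None
    threshold: float = 0.0
    left: Optional["Node"] = None
    right: Optional["Node"] = None
    mean: float = 0.0  # only meaningful for leaves

    def is_leaf(self) -> bool:
        return self.left is None and self.right is None


def leaf(targets: List[float]) -> Node:
    """Create a leaf that predicts the mean of its training targets."""
    return Node(mean=sum(targets) / len(targets))


def predict(node: Node, record: Dict[str, float]) -> float:
    """Walk from the root to a leaf and return that leaf's mean."""
    while not node.is_leaf():
        node = node.left if record[node.feature] <= node.threshold else node.right
    return node.mean


tree = Node(feature="petal_length", threshold=3.05,
            left=leaf([0.2, 0.2]), right=leaf([1.4, 2.5]))
print(predict(tree, {"petal_length": 1.5}))
print(predict(tree, {"petal_length": 5.0}))
```

Since every record in a leaf gets the same prediction, a regression tree produces a step-like set of predicted values rather than a smooth line.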

Column Filter:

This node allows columns to be filtered from the input table; only the remaining columns are passed to the output table. Within the dialog, columns can be moved between the Include and Exclude lists. Drag and drop the Column Filter from the node repository into the workflow editor.
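
In plain Python terms, an include list simply selects which keys of each row survive. The sketch below keeps the observed petal width and the prediction, as the workflow later does; the prediction column name `Prediction (petal_width)` is a hypothetical placeholder, not necessarily the name KNIME generates.

```python
from typing import Dict, Iterable, List


def column_filter(rows: Iterable[Dict[str, object]], include: List[str]) -> List[Dict[str, object]]:
    """Keep only the columns listed in `include`; every other column is excluded."""
    return [{name: row[name] for name in include} for row in rows]


rows = [
    {"sepal_length": 5.1, "petal_width": 0.2,
     "Prediction (petal_width)": 0.2, "species": "Iris-setosa"},
]
print(column_filter(rows, ["petal_width", "Prediction (petal_width)"]))
```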

Line Plot:

Plots the numeric columns of the input table as lines. All values are mapped to a single y-axis, which may distort the visualization if the values in different columns differ greatly in magnitude. Only columns with a valid domain are available in this view. Make sure that the predecessor node is executed, or set the domain with the Domain Calculator node. Drag and drop the Line Plot from the node repository into the workflow editor.
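
For a numeric column, a domain can be thought of as its lower and upper bound. The sketch below computes such bounds for rows stored as Python dictionaries and skips non-numeric columns. It only illustrates the idea of a numeric domain; it is not the Domain Calculator node's behaviour, and the column names and values are made up.

```python
from typing import Dict, List, Tuple


def numeric_domains(rows: List[Dict[str, object]]) -> Dict[str, Tuple[float, float]]:
    """(lower, upper) bounds for each column whose non-missing values are all numbers."""
    domains: Dict[str, Tuple[float, float]] = {}
    columns = list(rows[0]) if rows else []
    for col in columns:
        values = [row[col] for row in rows if row.get(col) is not None]
        if values and all(isinstance(v, (int, float)) and not isinstance(v, bool) for v in values):
            domains[col] = (min(values), max(values))
    return domains


rows = [
    {"petal_width": 0.2, "prediction": 0.2, "species": "Iris-setosa"},
    {"petal_width": 1.4, "prediction": 1.95, "species": "Iris-versicolor"},
    {"petal_width": 2.5, "prediction": 1.95, "species": None},
]
print(numeric_domains(rows))
```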

Numeric Scorer:

This node computes certain statistics between a numeric column's values (ri) and the predicted values (pi). It computes R²=1-SSres/SStot=1-Σ(pi-ri)²/Σ(ri-1/n*Σri)² (which can be negative!), mean absolute error (1/n*Σ|pi-ri|), mean squared error (1/n*Σ(pi-ri)²), root mean squared error (sqrt(1/n*Σ(pi-ri)²)) and mean signed difference (1/n*Σ(pi-ri)). The computed values can be inspected in the node's view and/or further processed using the output table. Drag and drop the Numeric Scorer from the node repository into the workflow editor.
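
The five statistics listed above translate directly into code. The sketch below computes them for an observed column `r` and predictions `p` exactly as the formulas state; the function name and the dictionary keys are this sketch's own choices, not the Numeric Scorer's output column names.

```python
import math
from typing import Dict, Sequence


def numeric_scores(r: Sequence[float], p: Sequence[float]) -> Dict[str, float]:
    """Statistics between observed values r and predictions p."""
    if len(r) != len(p) or not r:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(r)
    mean_r = sum(r) / n
    ss_res = sum((pi - ri) ** 2 for ri, pi in zip(r, p))
    ss_tot = sum((ri - mean_r) ** 2 for ri in r)  # zero if all r are equal -> R^2 undefined
    mse = ss_res / n
    return {
        "R^2": 1 - ss_res / ss_tot,  # can be negative for poor predictions
        "mean absolute error": sum(abs(pi - ri) for ri, pi in zip(r, p)) / n,
        "mean squared error": mse,
        "root mean squared error": math.sqrt(mse),
        "mean signed difference": sum(pi - ri for ri, pi in zip(r, p)) / n,
    }


observed = [1.0, 2.0, 3.0]
predicted = [1.0, 2.0, 4.0]
for name, value in numeric_scores(observed, predicted).items():
    print(f"{name}: {value:.4f}")
```

R² becomes negative when the predictions are worse than always predicting the mean of the observed values, which is why the formula comes with the "(can be negative!)" warning.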

Connecting all the nodes in the workflow editor to perform regression with the simple regression tree:

Connections:

You can connect two nodes by dragging the mouse from the out-port of one node to the in-port of another node. Loops are not permitted. If a node is already connected, you can replace the existing connection by dragging a new connection onto it. If the node is already executed, you will be asked to confirm the resulting reset of the target node. You can also drag the end of an existing connection to a new in-port (either of the same node or of a different node).
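
"Loops are not permitted" means a workflow is a directed acyclic graph. The sketch below models a workflow as node names with directed edges and rejects a new connection when its target can already reach its source, since that edge would close a loop. The `Workflow` class is hypothetical and ignores ports; it only illustrates the rule.

```python
from collections import defaultdict
from typing import DefaultDict, Set


class Workflow:
    """Hypothetical model of a workflow: node names connected by directed edges."""

    def __init__(self) -> None:
        self.successors: DefaultDict[str, Set[str]] = defaultdict(set)

    def _reaches(self, start: str, target: str) -> bool:
        """Depth-first search: is `target` reachable from `start`?"""
        stack, seen = [start], set()
        while stack:
            node = stack.pop()
            if node == target:
                return True
            if node not in seen:
                seen.add(node)
                stack.extend(self.successors[node])
        return False

    def would_create_loop(self, src: str, dst: str) -> bool:
        # src -> dst closes a loop exactly when src is already reachable from dst.
        return self._reaches(dst, src)

    def connect(self, src: str, dst: str) -> None:
        if self.would_create_loop(src, dst):
            raise ValueError(f"{src} -> {dst} would create a loop")
        self.successors[src].add(dst)


wf = Workflow()
wf.connect("File Reader", "Partitioning")
wf.connect("Partitioning", "Simple Regression Tree Learner")
wf.connect("Simple Regression Tree Learner", "Simple Regression Tree Predictor")
print(wf.would_create_loop("Simple Regression Tree Predictor", "File Reader"))  # True
```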

Execute:

In the next step, you probably want to execute the node, i.e. have the node actually perform its task on the data. To do this, right-click the node to open the context menu and select "Execute". You can also choose the related button from the toolbar; the button looks like the icon next to the context menu entry. It is not necessary to execute every single node: if you execute the last node of a chain of connected but not yet executed nodes, all predecessor nodes will be executed before the last node is executed.
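
The rule that executing the last node first executes its predecessors can be sketched as a depth-first traversal over each node's inputs. In the sketch, the `PREDECESSORS` mapping is an assumed wiring of the workflow in this post (the predictor receiving the model from the learner and the data from the Partitioning node), and `run` stands in for whatever a node actually does.

```python
from typing import Callable, Dict, List, Set


def execute(node: str, predecessors: Dict[str, List[str]], executed: Set[str],
            run: Callable[[str], None]) -> None:
    """Execute `node` after first executing any not-yet-executed predecessors."""
    if node in executed:
        return
    for pred in predecessors.get(node, []):
        execute(pred, predecessors, executed, run)
    run(node)
    executed.add(node)


# Assumed connections for the workflow in this post (predecessors of each node).
PREDECESSORS = {
    "Partitioning": ["File Reader"],
    "Simple Regression Tree Learner": ["Partitioning"],
    "Simple Regression Tree Predictor": ["Simple Regression Tree Learner", "Partitioning"],
    "Column Filter": ["Simple Regression Tree Predictor"],
    "Line Plot": ["Column Filter"],
}

order: List[str] = []
execute("Line Plot", PREDECESSORS, set(), order.append)
print(order)
```

The `executed` set makes sure a node shared by two paths, such as Partitioning here, runs only once.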

Execute All:

The toolbar above the editor also has a button to execute all not yet executed nodes in the workflow.

This also works if a node in the flow shows the red status light because of missing information in its predecessor node. When the predecessor node is executed and the node with the red status light can apply its settings, it is executed, as are its successors. The underlying workflow manager also tries to execute branches of the workflow in parallel.
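
One simple way to picture "execute all, with branches in parallel" is to group nodes into waves: a node joins a wave once all of its predecessors are in earlier waves (Kahn's algorithm), and the nodes of one wave run concurrently. This is a simplified model under stated assumptions, not how KNIME's workflow manager is implemented; the topology, with the Numeric Scorer fed by the predictor as a second branch, is also assumed.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List


def execution_waves(predecessors: Dict[str, List[str]], nodes: List[str]) -> List[List[str]]:
    """Group nodes into waves so that every predecessor of a node is in an earlier wave."""
    remaining = {n: set(predecessors.get(n, [])) for n in nodes}
    waves: List[List[str]] = []
    while remaining:
        ready = sorted(n for n, preds in remaining.items() if not preds)
        if not ready:
            raise ValueError("the workflow contains a loop")
        waves.append(ready)
        for n in ready:
            del remaining[n]
        for preds in remaining.values():
            preds.difference_update(ready)
    return waves


def execute_all(predecessors: Dict[str, List[str]], nodes: List[str],
                run: Callable[[str], None]) -> None:
    """Run the nodes of each wave concurrently; a wave starts after the previous one finished."""
    with ThreadPoolExecutor() as pool:
        for wave in execution_waves(predecessors, nodes):
            list(pool.map(run, wave))  # list() waits for the wave and re-raises errors


# Assumed topology: the predictor output feeds two branches.
PREDECESSORS = {
    "Partitioning": ["File Reader"],
    "Simple Regression Tree Learner": ["Partitioning"],
    "Simple Regression Tree Predictor": ["Simple Regression Tree Learner", "Partitioning"],
    "Column Filter": ["Simple Regression Tree Predictor"],
    "Line Plot": ["Column Filter"],
    "Numeric Scorer": ["Simple Regression Tree Predictor"],
}
NODES = ["File Reader", *PREDECESSORS]
for wave in execution_waves(PREDECESSORS, NODES):
    print(wave)

log: List[str] = []
execute_all(PREDECESSORS, NODES, log.append)
```

In this wiring, the Column Filter and the Numeric Scorer form one wave, so they can run at the same time once the predictor has finished.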

Execute and Open View:

The node context menu also contains the "Execute and open view" option. This executes the node and immediately opens the view. If a node has more than one view, only the first view is opened.

In the workflow editor, when you open the view of the Partitioning node, you can see the first partition, the training data, which contains 80% of the Iris data set, and the second partition, the test data, which contains the remaining 20%.

When you open the view of the Simple Regression Tree Learner node, you can see the regression tree. The tree can be expanded and examined by clicking the plus symbol (+) and collapsed by clicking the minus symbol (-). You can also adjust the zoom level between 60% and 120%.

When you open the view of the Simple Regression Tree Predictor, it displays the predicted values for the test data set.

When you open the view of the Column Filter, you can see that the other columns have been filtered out; only petal width and the predicted petal width are kept, which will be used to plot a line graph.

When you open the view of the Line Plot, you can see a line plot that visualizes the performance of the simple regression tree. By clicking "Fit to size" you can fit the plot to the screen for a better visualization. You can also change the colour by clicking "Background colour".

When you open the view of the Numeric Scorer node, it displays the statistics that score the predictions.

INTERPRETATION:

The mean absolute error of the prediction (petal width) is 0.147. In statistics, the mean absolute error (MAE) is a quantity used to measure how close forecasts or predictions are to the eventual outcomes.

In statistics, the mean squared error (MSE) or mean squared deviation (MSD) of an estimator (of a procedure for estimating an unobserved quantity) measures the average of the squares of the errors or deviations, that is, the difference between the estimator and what is estimated. MSE is a risk function, corresponding to the expected value of the squared error loss or quadratic loss. The difference occurs because of randomness or because the estimator does not account for information that could produce a more accurate estimate. The MSE is a measure of the quality of an estimator: it is always non-negative, and values closer to zero are better. Here the MSE is 0.04.

The root-mean-square deviation (RMSD) or root-mean-square error (RMSE) is a frequently used measure of the differences between values (sample and population values) predicted by a model or an estimator and the values actually observed. The RMSD is the square root of the mean of the squared differences between predicted and observed values; it equals the standard deviation of those differences only when their mean is zero. These individual differences are called residuals when the calculations are performed over the data sample that was used for estimation, and prediction errors when computed out-of-sample. The RMSD aggregates the magnitudes of the prediction errors into a single measure of predictive power. RMSD is a measure of accuracy used to compare the forecasting errors of different models on a particular data set, not between data sets, because it is scale-dependent. Although RMSE is one of the most commonly reported measures of disagreement, it is the square root of the average of squared errors, so it confounds information concerning the average error with information concerning the variation in the errors. The effect of each error on RMSD is proportional to the size of the squared error, so larger errors have a disproportionately large effect on RMSD. Consequently, RMSD is sensitive to outliers.
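
The outlier sensitivity of RMSD can be checked with two made-up lists of prediction errors that have the same total absolute error: one spreads it over four rows, the other puts it all in one row. Since RMSE is the square root of MSE, an MSE of about 0.04 corresponds to an RMSE of about 0.2; the reported values are rounded, so that link is only approximate. The error values below are purely illustrative.

```python
import math
from typing import Sequence


def mae(errors: Sequence[float]) -> float:
    """Mean absolute error of a list of prediction errors (predicted - observed)."""
    return sum(abs(e) for e in errors) / len(errors)


def rmse(errors: Sequence[float]) -> float:
    """Root mean squared error: the square root of the mean squared error."""
    return math.sqrt(sum(e * e for e in errors) / len(errors))


spread_out = [0.1, -0.1, 0.1, -0.1]   # four moderate errors
one_outlier = [0.0, 0.0, 0.0, 0.4]    # same total absolute error, in a single row

for name, errors in [("spread out", spread_out), ("one outlier", one_outlier)]:
    print(f"{name}: MAE={mae(errors):.2f} RMSE={rmse(errors):.2f}")
```

Both lists have an MAE of 0.10, but the RMSE doubles from 0.10 to 0.20 when the error is concentrated in a single row, which is exactly the disproportionate effect of large errors described above.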

CONCLUSION:


KNIME integrates various other open-source projects, e.g. machine learning algorithms from Weka, the R statistics package, as well as LIBSVM, JFreeChart, ImageJ, and the Chemistry Development Kit. KNIME is implemented in Java but also allows for wrappers calling other code, in addition to providing nodes that can run Java, Python, Perl and other code fragments.

Overall, this is a very sophisticated and professional piece of software. Because of its flexibility, it is nowadays our chief cheminformatics workhorse, and voting with one's feet is surely the best possible endorsement. The KNIME philosophy and business model of mixed commercial and free (but open) software allows its continued improvement while keeping it freely available to desktop users. Some minor gripes relate to the fact that it seems only to read but not write .xlsx files; we are confident that someone will write a node to let it do so soon. There is a substantial and growing community of users, and many training schools and the like. Because of this, I think it will continue to grow in popularity. It is well worth a look for the GP community.

AUTHOR: G. POONURAJ NADAR
